I predict a riot.

If our nearest continental neighbour is anything to go by, looting typically follows a riot. And France knows a thing or two about rioting. But in the world of intellectual property, you can turn this received wisdom on its head. It may reasonably be argued that in our tiny outpost world of the intangible asset, the looting caused the riot. Let me explain.

Away with the fairies

There are questionable lawsuits and there’s just plain ‘kite flying’. It’s difficult to categorise a recent claim brought in the States against several of the big AI business players. I’ll run it by you anyway. Take a breath. This is a tad unusual.

Thousands of claimants in a class action have asserted that a range of AI businesses robbed them blind, and that those businesses' reckless behaviour could end the world. Well, not quite in those words, but not far off. The filing stated:

“… the Defendants’ disregard for privacy laws is matched only by their disregard for the potentially catastrophic risk to humanity.”

So, is the sky falling in and just what colour is the sky anyway in the world of AI?

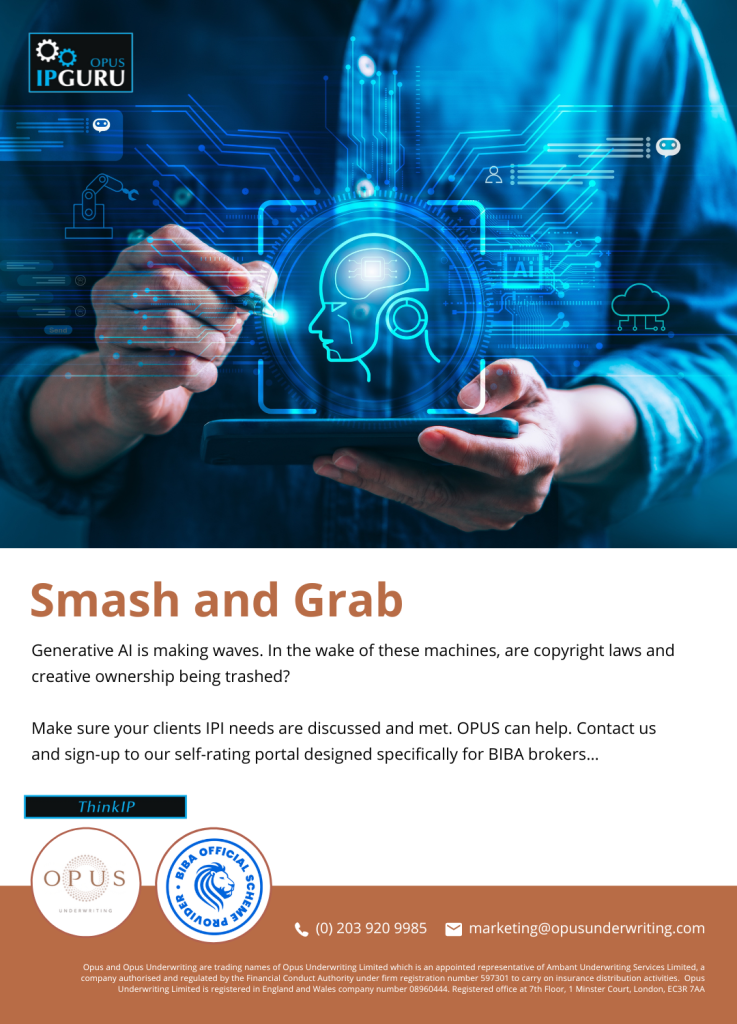

Generative AI is the new Japanese knotweed

AI is about as unwelcome with creative types as the well-known Nipponese garden invader is with property surveyors. Authors, artists, and many others are filing lawsuits like billyo against generative AI companies, including Microsoft, Meta and OpenAI, for allegedly rifling through their data and back catalogues (snouts in a virtual trough) and exploiting their work without permission, credit, or compensation. One suit states that the platforms “massively misappropriate protected imagery”.

Just recently, comedian Sarah Silverman joined authors Christopher Golden and Richard Kadrey in suing OpenAI and Meta. According to The Week magazine, they say:

“…the companies used pirated copies of their books to train their chatbots. The authors claim the companies scraped their copyrighted data from ‘shadow library’ websites like Bibliotik, Library Genesis and Z-Library.”

Feeding frenzy

If you stop and think about it, it makes sense. It’s like shoving branches into a chipper. Imagine a whole forest of trees eaten raw by a machine with a bottomless chip-catching capacity. The AI platforms must be fed data to learn their job of imitation. This requires an extraordinary amount of data. Buckets of it, skiploads of it even, from all over the internet and from all sources. This is the looting that led to the riot.

Rare earth elements

Let’s take an example. You want an AI platform to write an article on soil samples in the style of Jeremy Clarkson. Yes – apparently that’s possible. If Jeremy won’t write it (and cheaply), an AI-powered robot will. But it’s only possible if every article and book Clarkson has written and published is devoured by AI to reproduce his style as ‘faithfully’ as possible. The cold soil sample data is probably open source – Clarkson’s work probably isn’t and, rightly, he may well object to the ploughing and plunder of his material. IP ownership and copyright issues should be weighed. The thing is, right now, they’re not being weighed very much, if at all.

Scraping the barrel

Just as bottom trawling destroys sea life, AI chatbot training savagely assaults creative ownership. Copyrights are allegedly infringed like by-catch: willy-nilly and without a care, as the need to teach and feed AI platforms marches on, grotesquely but steadily. To date, Shutterstock is the lone outlier in trying to compensate human creators. It’s a start.

More usually, it’s fair to describe current generative AI platforms as standing in the Silver Dollar saloon of Deadwood like a black-hatted common cardsharp, accused and surrounded by legal gunslingers, with bar stools flying all around. Hence the queue of artists with means who are kicking off. And some without means but with union backing, like the 11,500-strong Writers Guild of America, now on strike. Kind of puts their action into perspective, doesn’t it? Canada’s writers’ guild may shortly follow. Artistic reaction to this existential threat isn’t going away any time soon.

Is it that Hollywood and big network producers see the opportunity to have the product of creative talent without having to pay for it or manage it and its pushy agents? Could it be that simple and that shallow? Likewise for the music industry? Sad, if true.

The beast within

Authors are arguing in court that their books are being unlawfully “ingested” to train AI platforms. The model underlying ChatGPT is trained on data that is publicly available on the internet.

Complaints are being filed saying that AI platforms “unfairly” profit from “stolen writing and ideas”.

You can see why many authors believe this.

Early days

To win such actions, claimants need specific and detailed proof of copyright infringement, plus a demonstration that the defence of “fair use” does not apply (a defence not available in the UK). That is technically more difficult than it seems, and early filings are in jeopardy of being ‘tossed’ out by U.S. judges for lack of particularity.

Later lawsuits will learn from this, adjust, endure, and possibly win. Lawyers can learn and evolve too. Their intelligence is real – not artificial. The legal system will catch up. The AI platforms have weathered only the opening salvo or two. This is a war, not a battle.

There will be so much more to come, particularly if the AI platforms themselves become increasingly secretive in their methodology, as recent evidence presented in court actions suggests. Getty Images is lining up to have a go. That should be interesting. A slow-motion wrestling match between inflated giants. Winner takes all. Getty Images has every right to protect its business model and the stock it fairly purchased to legally exploit, just as individual creatives have every right to protect and exploit their IP. They own it.

Ebony and ivory…

Unusually, lawyers and scientists agree on one key issue. The team of lawyers representing a couple of author claimants recently observed:

It is “ironic” that “so-called ‘artificial intelligence’” tools rely on data made by humans. “Their systems depend entirely on human creativity. If they bankrupt human creators, they will soon bankrupt themselves.”

New Scientist (24 June 2023) reported:

“Ilia Shumailov at the University of Oxford and his colleagues simulated how AI models would develop if they were trained using the output of other AIs. They found that the models would become heavily biased, overly simplistic and disconnected from reality – a problem they called model collapse.”

So, pretty useless, then, if they keep learning from other AIs. To survive and thrive, AI models must mine human talent. And for that, their masters must pay the going price and ask nicely.

Anyone want to read a (not very) humorous article on soil samples…?

Murray Fairclough

Development Underwriter

OPUS Underwriting Limited

+44 (0) 780 145 9940

underwriting@opusunderwriting.com